Legislative and enforcement developments concerning artificial intelligence (AI) have received considerable attention recently: first the data protection authority's procedure in Italy concerning ChatGPT (which also resulted in the temporary suspension of the service in Italy), and then, attracting even more widespread interest, the approval of the draft EU AI Act by the competent committees of the European Parliament.

On 11 May, the competent committees of the European Parliament voted in favour of the EU's draft regulation on artificial intelligence, and the vote in the European Parliament's plenary session is expected soon (in mid-June). If the plenary approves the draft, the next step will be the informal trilogue, in which Parliament, the Council and the Commission negotiate the final version of the legislation. The text agreed by the parties can then be formally adopted; the final AI Act is expected to be adopted in the first half of 2024. (The text approved by parliamentary committees is available here.)

It is important to point out that the planned AI regulation does not rest on a single pillar: it is not limited to applying data protection rules to AI, but covers many other aspects as well. It is also worth noting, however, that in the absence of AI-specific rules, the application of data protection rules (primarily the requirements of the GDPR) to AI-based technologies currently plays a prominent role (see the events surrounding ChatGPT). Given the high interest in ChatGPT, its suspension in Italy was perhaps the first data protection procedure involving AI to attract the attention of the wider public (also outside Italy), but it was by no means the first time that a data protection authority had targeted AI-based data processing. Below I have collected cases (mainly from the EU) in which the use of artificial intelligence was examined in a data protection context.

1. ChatGPT

The Italian procedure concerning ChatGPT, which is still ongoing, has received the most attention so far, but other EU authorities (e.g. the French and Spanish data protection authorities) have also taken a keen interest in ChatGPT, and the European Data Protection Board has also set up a task force "to foster cooperation and to exchange information on possible enforcement actions conducted by data protection authorities".

The main issues raised so far in connection with ChatGPT (based mainly on the actions and press releases of the Italian authority) are:

- legal basis for data processing,

- information and transparency,

- the exercise of data subjects' rights,

- protection of children.

2. Replika

Another Italian case involving a chatbot dates from February 2023, when the Garante (the Italian authority) decided to block a chatbot called Replika. The AI-based application, which promises a "virtual friend", was temporarily banned in order to protect children and other vulnerable groups.

From the data protection perspective, the DPA primarily found that the data controller failed to comply with the following requirements:

- providing an adequate legal basis for data processing (the contractual legal basis pursuant to Article 6(1)(b) of the GDPR was found inadequate by the authority),

- transparency and information obligations,

- age-verification measures.

Further developments can be expected in this case: the suspension ordered in February 2023 did not end the proceedings but only served to protect those most affected (especially members of vulnerable groups), and the investigation is ongoing.

3. Clearview AI

Clearview AI, a company that regularly appears in the news and whose very name indicates its reliance on artificial intelligence, develops a facial recognition system and processes a huge amount of personal data (including facial images collected from the Internet). The company often sells its services to law enforcement agencies. (For more information on the company's activities, see the New York Times' 2020 report and the BBC's recent article on police use of Clearview AI's product, which also reveals that the company has collected 30 billion (!) images from social media and other public sources without the permission of those involved.)

In recent years, Clearview AI's operation has been the subject of several procedures by data protection authorities:

- Hamburg, Germany (2020, 2021): a fine of €10,000 for failure to cooperate properly with the authority (see the annual report of the Hamburg Data Protection Authority, pp. 105-107) and a decision ordering the deletion of data in an individual case,

- Italy (February 2022): the Italian authority "rewarded" the company's data processing activities with a fine of €20 million,

- United Kingdom (May 2022): a fine of £7.5 million imposed on the company,

- Greece (June 2022): the Hellenic DPA also imposed a fine of €20 million in connection with Clearview AI's activities,

- France (November 2021, October 2022 and April 2023): in 2021 the French authority (CNIL) found that Clearview AI's operation did not comply with the GDPR and ordered the company to delete the unlawfully collected data; in 2022, after the company failed to respond to the 2021 order, it imposed a fine of €20 million; and in 2023, as Clearview AI continued to disregard the previous decisions, the CNIL imposed an additional overdue penalty payment of €5.2 million (€100,000 for each day of delay in providing proof of compliance with the CNIL's orders),

- Austria (April 2023): the Austrian authority also found the processing unlawful but, perhaps surprisingly, imposed no fine.

In the above cases involving Clearview AI, the following major violations were typically found:

- lack of an appropriate legal basis (Article 6 GDPR),

- infringement of the provisions on processing sensitive data (Article 9),

- breach of transparency and data subject rights requirements (Articles 12, 13, 14, 15, etc.),

- failure to appoint a representative within the EU (Article 27),

- non-cooperation with the DPA (Article 31).

In addition to imposing fines, the authorities generally required the deletion of the unlawfully collected data (images) and the termination of the unlawful processing activities. Unfortunately, however, the repeated decisions of the French authority show that, despite these obligations, the company has not ceased its unlawful processing practice.

Besides the fines imposed on Clearview AI, some law enforcement bodies were also sanctioned for unlawfully using the services provided by Clearview AI:

- Sweden (2021): a fine of SEK 2,500,000 (approximately €250,000) was imposed on the Police Authority for infringing the Criminal Data Act by using Clearview AI to identify individuals,

- Finland (2021): the use of Clearview AI's services was also found to be unlawful.

The European Data Protection Board also highlighted in 2020 that "without prejudice to further analysis on the basis of additional elements provided, the EDPB is therefore of the opinion that the use of a service such as Clearview AI by law enforcement authorities in the European Union would, as it stands, likely not be consistent with the EU data protection regime." (see the press release about the 31st plenary session of the EDPB)

4. Foodinho, Deliveroo

The proceedings against these two companies were also conducted by the Italian authority. Foodinho was fined €2.6 million and Deliveroo €2.5 million, both in 2021.

In these cases, the authority made the following main findings:

- violation of transparency requirements,

- failure to properly apply the principles of data protection by design and by default,

- data security deficiencies and a lack of adequate technical and organisational measures,

- violation of requirements related to the exercise of data subjects' rights, including the rights related to automated decision-making (Article 22 GDPR),

- lack of a data protection impact assessment, etc.

5. Medical use for predictive purposes

Given the above cases, it may come as no surprise that decisions concerning the "predictive medical application" of AI were also issued in Italy, affecting three hospitals, each fined €55,000. (More information on the decisions in Italian is available here.) According to the information available, the fines resulted from deficiencies in the processing of sensitive data (processing without an appropriate legal basis), inadequate information provided to the data subjects and failure to carry out the necessary impact assessment.

6. Budapest Bank

In 2022, the Hungarian DPA imposed a fine of HUF 250 million (approximately €650,000) on Budapest Bank. The bank used an AI-based application to analyse customer service conversations, and the DPA identified the following data protection gaps in connection with the processing of personal data:

- violation of data protection principles ("lawfulness, fairness and transparency" and "purpose limitation", Article 5(1)(a) and (b) GDPR),

- deficiencies related to the legal basis of processing and to the compatibility of further processing with the original purpose (Article 6),

- non-compliance with transparency and information obligations (Articles 12 and 13),

- failures regarding the exercise of data subjects' rights (in particular the right to object, Article 21),

- failure to apply appropriate technical and organisational measures (Article 24(1)),

- breach of the principles of data protection by design and by default (Article 25).

7. Conclusion

The above cases suggest that AI-related issues are likely to come increasingly into focus in the application of data protection rules. Some authorities, especially the Italian DPA (Garante), are leading the way in applying data protection rules to AI-based tools and are paying special attention to this area, and more and more cases involving the use of AI are likely to become public.

The above cases also indicate which requirements set out in the GDPR arise most often in connection with the use of AI-based solutions:

- requirements concerning legal basis,

- transparency and information obligations,

- the exercise of data subjects' rights,

- carrying out or failing to carry out an impact assessment.

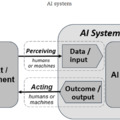

Data controllers and developers of AI solutions should pay close attention to the above issues in order to ensure data protection compliance. Of course, the list of potential concerns related to the use of AI systems goes far beyond data protection, so even before EU legislation on AI is adopted, it is essential that developers and users of AI systems act prudently and apply measures proportionate to the risks involved.