The concept of shadow IT is by no means new. It is a decades-old phenomenon that gained momentum with the advent of cloud services and the spread of increasingly advanced computing devices (especially smartphones, laptops and tablets) among a wide range of users. Compare the so-called "Bring Your Own Device" (BYOD) phenomenon, which also created serious internal regulatory challenges for businesses.

More recently, and mainly since ChatGPT became available in November 2022, we have become familiar with a further subset of shadow IT: "shadow AI", i.e. the use of unapproved, unverified artificial intelligence (AI) applications.

What do the numbers show?

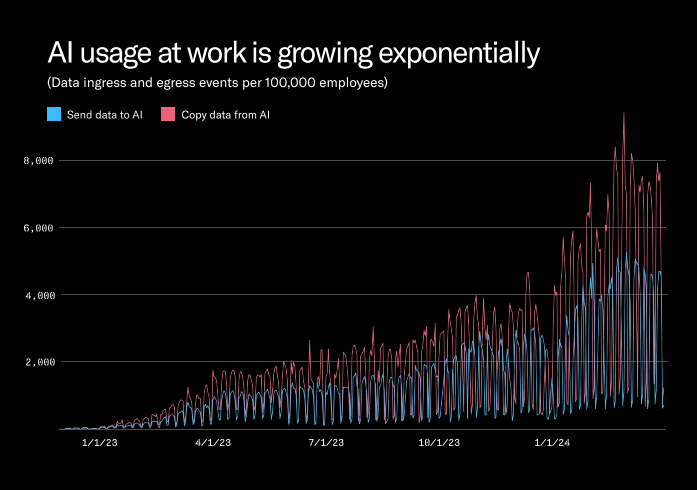

According to a recent report (May 2024) published by Cyberhaven Labs, the use of AI applications in the workplace increased by 485% between March 2023 and March 2024, with solutions from three major providers (OpenAI, Microsoft, Google) dominating the market.

Source: Cyberhaven Labs: AI Adoption and Risk Report, Q2 2024, p. 3

What exactly is "shadow AI"?

Similar to shadow IT, "shadow AI" means the unapproved, uncontrolled use of various (typically cloud-based) artificial intelligence services. In these cases, employees use AI-based applications without the company's approval or knowledge, typically with their own accounts but for work purposes, and often enter employer information (which may include protected, sensitive business information and personal data). Since the data is not uploaded through verified, approved corporate accounts in a secure corporate environment, corporate data assets and trade secrets are also at significant risk. (You may recall that in May 2023, Samsung was forced to ban employee use of ChatGPT and other AI apps following a data leak caused by improper use.)

What company data is affected?

When shadow AI is used in the workplace, internal information, trade secrets, confidential documents and personal data processed by the company may also be compromised.

An interesting picture emerges when we look at which areas (HR, customer relations, marketing, legal, etc.) the data that "ends up" uncontrollably at different AI service providers comes from, and what proportion of that data is uploaded through corporate as opposed to non-corporate (typically the user's own) accounts. This can give us an idea of which departments are particularly likely to use shadow AI. (According to the report, the legal domain, for example, accounts for 2.4% of the sensitive information entered into AI systems, but more than 80% of this "lands" at the service provider through non-corporate accounts. See pages 8-9 of the report.)
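To make the metric concrete, the following minimal Python sketch computes, per department, the share of uploads made through non-corporate accounts. The event records, department names and the `non_corporate_share` function are hypothetical illustrations, not data or methodology from the report.

```python
from collections import defaultdict

# Hypothetical upload events: (department, account_type).
# These values are invented for illustration and are NOT taken from the report.
events = [
    ("legal", "personal"),
    ("legal", "personal"),
    ("legal", "corporate"),
    ("hr", "corporate"),
    ("hr", "personal"),
    ("marketing", "corporate"),
]


def non_corporate_share(events):
    """Return {department: fraction of uploads made via non-corporate accounts}."""
    totals = defaultdict(int)
    non_corporate = defaultdict(int)
    for department, account_type in events:
        totals[department] += 1
        if account_type != "corporate":
            non_corporate[department] += 1
    return {dept: non_corporate[dept] / totals[dept] for dept in totals}


shares = non_corporate_share(events)

# List departments from the highest to the lowest non-corporate share.
for dept, share in sorted(shares.items(), key=lambda item: item[1], reverse=True):
    print(f"{dept}: {share:.0%} via non-corporate accounts")
```

A department with a high share here would be a natural first candidate for awareness-raising or for the rollout of an approved corporate tool.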

What are the dangers of "shadow AI"?

The main threats and risks of shadow AI are largely the same as those of shadow IT and the improper use of IT systems in general: the leakage of sensitive corporate data (trade secrets) and personal data, non-compliance with applicable regulations, data loss, increased exposure of the corporate IT environment to attacks, and the related reputational and financial risks.

However, there may also be AI-specific risks, such as a provider using enterprise data to train its AI models. (Larger providers increasingly offer enterprise versions of their AI applications, e.g. ChatGPT Enterprise, in which they typically agree not to use the corporate data that users enter to train their models.) Such use of the data can in turn lead to threats in the context of AI-specific attacks (e.g. prompt injection attacks).

Among the risks associated with non-compliance, the risk of a breach of data protection rules deserves particular attention; the Dutch Data Protection Authority recently drew explicit attention to it in connection with the use of AI chatbots. The authority's press release refers precisely to cases that may relate to the unauthorised and uncontrolled use of AI systems (i.e. shadow AI).

It is also worth bearing in mind that compliance with the AI Act, which entered into force on 1 August 2024 (and will become applicable in several steps), is only conceivable if organisations using AI systems know which AI systems their employees use and for exactly what purpose.

What can be done to mitigate the risks of shadow AI?

Of course, as with shadow IT in general, there are several measures that can be used to fight "shadow AI". A complete ban is typically not a solution, as it can itself lead to the unauthorised use of AI solutions by employees (their use has many advantages: it can make life easier for employees, improve productivity and efficiency, and often make work more diverse and interesting).

Taking the following measures could help avoid the use of shadow AI (and shadow IT in general):

- raising awareness;

- increasing AI literacy (and digital literacy) among employees;

- establishing appropriate internal regulatory frameworks (e.g. the checklist for the use of LLM-based chatbots issued by the Hamburg Data Protection Commissioner can be used as a point of reference for the use of chatbots);

- establishing and consistently applying appropriate internal control processes;

- monitoring the available IT and AI solutions and, after the necessary preparation, making available those tools that facilitate work (the release of such tools must, of course, be subject to appropriate security guarantees and operating conditions*). As part of this monitoring, it is also worth continuously collecting feedback from employees about their work needs and the tools that could improve their efficiency and productivity, which can then be translated into specific IT and AI requirements.

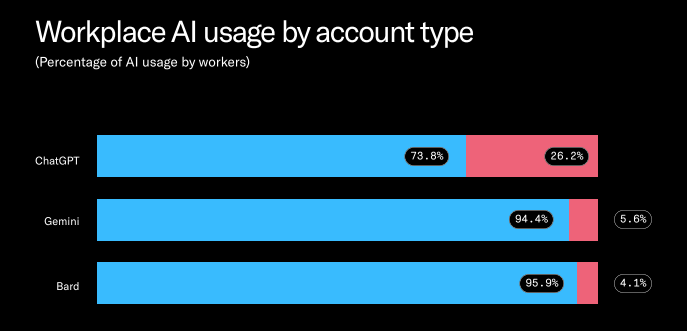

* Of course, it is a major challenge to follow the rapid developments in AI services and applications. A good example is the data from the report cited above: in the case of ChatGPT, approximately 74% of employees use the tool with their own account, i.e. they do not use the "Enterprise" version. In the case of newer AI services such as Bard or Gemini, the situation is much worse, because the share of corporate accounts is negligible, around 5% (not to mention the mushrooming solutions offered by startups or smaller service providers).

Source: Cyberhaven Labs: AI Adoption and Risk Report, Q2 2024, p. 8

Even if companies cannot be fully up to date, it is worth tracking trends to prevent the wider use of unauthorised AI solutions. It is equally important that the expectations placed on employees are designed and communicated in a way that does not encourage the use of "shadow AI".