How the definition of an AI system is interpreted is crucial to the application of the AI Act. In this post, I therefore examine what the concept of an AI system covers under the AI Act and how an AI system can be distinguished from a 'traditional' IT system.

1. The "history" of the definition of an AI system in the AI Act

The definitions of AI and AI systems used in different pieces of legislation, proposals and recommendations differ, sometimes significantly and sometimes only slightly (a very good comparative selection of different definitions is available here, and another comprehensive overview of AI definitions and AI taxonomy from 2020 is available here).

One definition, which is the starting point for definitions of AI systems in several major pieces of legislation adopted or in the process of being adopted, is the definition adopted by the OECD in 2019 and amended on 8 November 2023. This definition was also taken as a basis by the European Parliament when it proposed the definition in the draft AI Act (rejecting the somewhat cumbersome version originally proposed by the Commission) and essentially became the official definition of the concept of AI system in EU-wide legislation based on the adopted text of the AI Act.

The recitals to the AI Act also underlined that consistency with the work of international organisations was explicitly intended in the creation of the notion: "The notion of ‘AI system’ in this Regulation should be clearly defined and should be closely aligned with the work of international organisations working on AI to ensure legal certainty, facilitate international convergence and wide acceptance, while providing the flexibility to accommodate the rapid technological developments in this field. [...]" (Recital 12, emphasis added)

(The US President's Executive Order on AI also contains a definition very similar to that in the OECD recommendation. Furthermore, the first binding international treaty on AI, the "Council of Europe Framework Convention on Artificial Intelligence and Human Rights, Democracy and the Rule of Law", adopted by the Council of Europe in May 2024 and opened for signature in September 2024, contains a similar definition.)

The OECD definition of an AI system (as updated in 2023) is as follows:

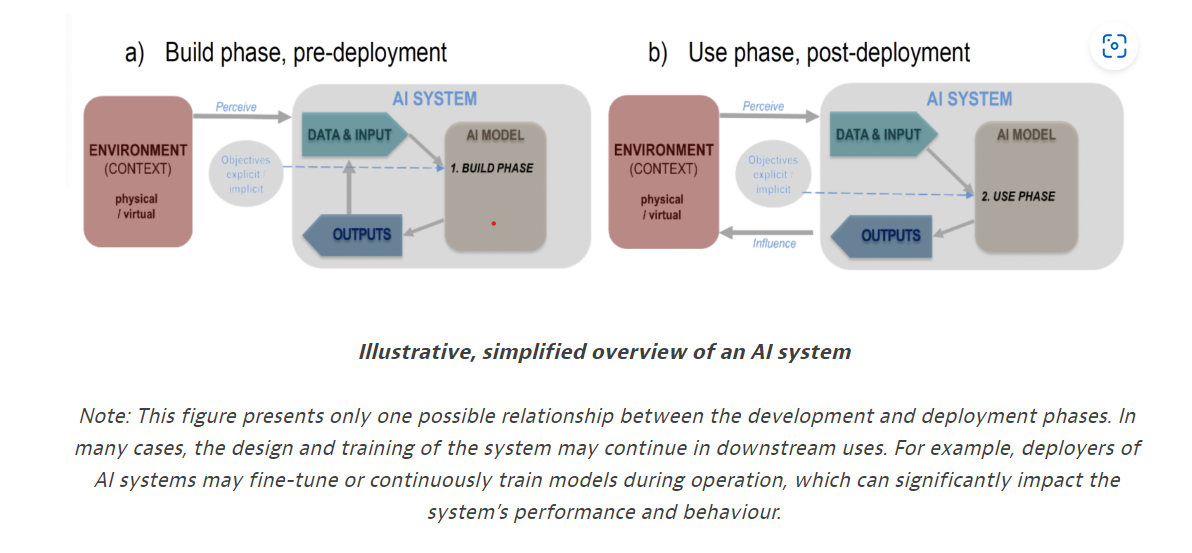

An AI system is a machine-based system that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments. Different AI systems vary in their levels of autonomy and adaptiveness after deployment.

Source: OECD (https://oecd.ai/en/ai-principles)

(See my previous post on the 2023 changes to the OECD definition here.)

2. The notion of an AI system in the AI Act

According to the AI Act, an AI system means

- a machine-based system

- that is designed to operate with varying levels of autonomy and

- that may exhibit adaptiveness after deployment, and

- that, for explicit or implicit objectives, infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions

- that can influence physical or virtual environments.

(See Article 3(1) of the AI Act for the definition.)

As I wrote earlier, the requirements for AI systems apply only if the system falls within the scope of the above definition. The question is therefore how AI systems can be distinguished from "traditional" IT systems, since some of the elements listed above also apply to "traditional" IT systems. For this distinction, operation with varying levels of autonomy may be the essential element: a system that operates entirely on a rule-based basis and shows no autonomy at all cannot qualify as an AI system, so the AI Act does not apply to it. Adaptiveness may also be relevant, but it is only a possibility ("may exhibit adaptiveness"), so it is not an essential element of the definition of AI systems.

The issue of differentiation from "traditional" IT systems is also addressed in the recitals to the AI Act: "[...] the definition should be based on key characteristics of AI systems that distinguish it from simpler traditional software systems or programming approaches and should not cover systems that are based on the rules defined solely by natural persons to automatically execute operations. [...]" (Recital 12, emphasis added)

[Update (07.02.2025): The Commission published guidelines on AI system definition to facilitate the first AI Act’s rules application.]

Let's look at the elements of the notion one by one.

2.1. A machine-based system

According to the recitals to the AI Act, "[t]he term ‘machine-based’ refers to the fact that AI systems run on machines." (Recital 12, emphasis added)

As an element of the notion, "machine-based" therefore refers only to how these systems are run and has no real distinguishing effect per se compared to "traditional" IT systems. For this reason, articles and analyses discussing the definition do not specifically address this element either.

Perhaps it is also worth noting here that, according to the recitals, "[...] AI systems can be used on a stand-alone basis or as a component of a product, irrespective of whether the system is physically integrated into the product (embedded) or serves the functionality of the product without being integrated therein (non-embedded)." (See Recital 12, emphasis added)

2.2. Operating with varying levels of autonomy

The second element of the concept, "operating with varying levels of autonomy", is the most important part of the definition, since it is perhaps the most tangible point of differentiation from "traditional" IT systems.

What does autonomy mean?

To grasp the concept of "varying levels of autonomy", we first need to look at what the notion of "autonomy" means in relation to AI systems.

According to the recitals to the AI Act: "AI systems are designed to operate with varying levels of autonomy, meaning that they have some degree of independence of actions from human involvement and of capabilities to operate without human intervention." (See Recital 12, emphasis added)

According to an explanation of the OECD definition:

AI system autonomy means the degree to which a system can learn or act without human involvement following the delegation of autonomy and process automation by humans. Human supervision can occur at any stage of an AI system’s lifecycle, such as during AI system design, data collection and processing, development, verification, validation, deployment, or operation and monitoring. Some AI systems can generate outputs without specific instructions from a human.

Autonomy, as stated above, essentially refers to operation without human intervention, or with only a limited degree of it. In practice, it may be important to distinguish autonomous operation from automated operation. Automated operation can also take place without human intervention (in fact, this can be its very essence), but an automated system follows predetermined steps in order to achieve a consistent output (result). Where autonomy is present to some degree, by contrast, operation cannot be completely predictable and deterministic.

2.3. After deployment, it may exhibit adaptiveness

According to the recitals to the AI Act, "The adaptiveness that an AI system could exhibit after deployment, refers to self-learning capabilities, allowing the system to change while in use." (See Recital 12, emphasis added)

Adaptiveness can also be an important differentiating element, but it is important to reiterate that it is only a possible element of the definition, i.e. the absence of adaptiveness does not in itself mean that the system is not an AI system.

Adaptiveness is a characteristic of some AI systems, and the recitals link it to self-learning capabilities. Similarly, the OECD's explanation of the AI system definition refers to it as a feature of machine learning. Thanks to this ability to self-learn after deployment, such AI systems can draw new conclusions that were not originally planned during development. This feature is therefore not so much a defining element as a reminder that the requirements for adaptive AI systems should reflect changes over time, and that the safe and proper functioning of the system should not be compromised at any point in its lifecycle.

Examples given in the OECD's explanation of the definition include a speech recognition system that adapts to an individual’s voice and a personalised music recommender system.

2.4. For explicit or implicit objectives, infers, from the input it receives, how to generate outputs

The recitals to the AI Act highlight the ability to infer as an important feature of AI systems: "[...] A key characteristic of AI systems is their capability to infer. This capability to infer refers to the process of obtaining the outputs, such as predictions, content, recommendations, or decisions, which can influence physical and virtual environments, and to a capability of AI systems to derive models or algorithms, or both, from inputs or data. The techniques that enable inference while building an AI system include machine learning approaches that learn from data how to achieve certain objectives, and logic- and knowledge-based approaches that infer from encoded knowledge or symbolic representation of the task to be solved. The capacity of an AI system to infer transcends basic data processing by enabling learning, reasoning or modelling. [...]"

"The reference to explicit or implicit objectives underscores that AI systems can operate according to explicit defined objectives or to implicit objectives. The objectives of the AI system may be different from the intended purpose of the AI system in a specific context."

(See Recital 12, emphasis added)

The inference is based on inputs. The inputs can come from humans or from machines, since the definition contains no restriction in this regard. Nor does the definition restrict how explicit and implicit objectives are set: it is not relevant how and by whom the objective is defined. (The earlier version of the OECD definition explicitly referred to human-defined objectives, but this excluded from the category of AI systems those whose objectives were not set by humans.)

2.5. The outputs can influence physical or virtual environments

According to the recitals to the AI Act, "... environments should be understood to be the contexts in which the AI systems operate, whereas outputs generated by the AI system reflect different functions performed by AI systems and include predictions, content, recommendations or decisions. [...]" (See Recital 12, emphasis added)

The ability to influence ("can influence") is sufficient: it is not necessary for an actual change to occur in either the virtual or the physical environment as a result of the outputs.

Other posts in the "Deep Dive into the AI Act" series: